Reasoning with AI

"Give an AI a fish, and it recognizes one fish. Teach an AI to fish, and it learns to identify countless fish in any context." -ChatGPT

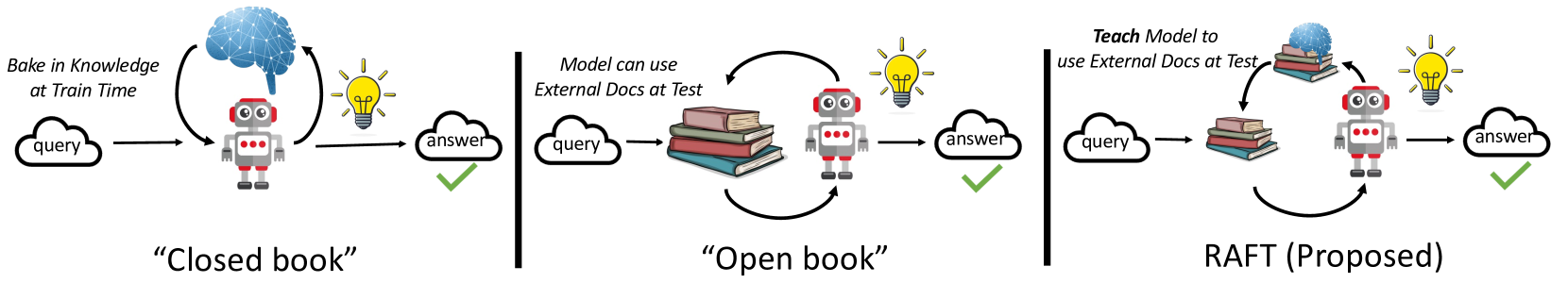

This clever adage came to mind when reading about Retrieval Augmented Fine Tuning (RAFT), a technique for extending the reasoning abilities of large language models (LLMs) by fine-tuning them with relevant data and Chain-of-Thought reasoning. More details below.

While I don't know how to fine-tune a model, I did test some prompting techniques, aiming to boost reasoning capability while upgrading a friend's Next.js website.

This week, my experience using AI assistants and AI-enabled features has me thinking about other ways regular companies might integrate AI into their customer experiences and products.

Keep reading to learn more.

The Research

The RAFT approach elevates an LLM's reasoning capabilities through fine-tuning with comprehensive reasoning chains and citations. Rather than simply memorizing facts, LLMs fine-tuned using RAFT show an impressive ability to filter out irrelevant data and reason their way to answers for questions they weren't explicitly trained on.
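To make the idea concrete, here is a rough sketch of what a single fine-tuning record in this style might look like. The helper `build_training_record`, the field names, and the toy documents are my own assumptions for illustration, not the exact format used by the RAFT authors.

```python
import json
import random


def build_training_record(question, relevant_doc, irrelevant_docs,
                          reasoning, answer, seed=0):
    """Assemble one illustrative record (hypothetical layout).

    The prompt mixes the relevant document with irrelevant ones, and the
    completion spells out the reasoning before giving the answer.
    """
    docs = [relevant_doc, *irrelevant_docs]
    random.Random(seed).shuffle(docs)  # so position does not give the answer away
    context = "\n\n".join(f"[doc {i + 1}] {d}" for i, d in enumerate(docs))
    return {
        "prompt": f"{context}\n\nQuestion: {question}",
        "completion": f"Reasoning: {reasoning}\nAnswer: {answer}",
    }


record = build_training_record(
    question="Which file holds the Next.js configuration?",
    relevant_doc="Next.js reads its configuration from next.config.js in the project root.",
    irrelevant_docs=["Netlify runs a build step before each deploy."],
    reasoning='One document says "Next.js reads its configuration from next.config.js", '
              "and the other is about deployment, so it can be ignored.",
    answer="next.config.js",
)
print(json.dumps(record, indent=2))
```

The point of the sketch is the shape of the data: the model sees noise alongside the useful document and is shown how to reason past it, with the supporting text quoted in the answer.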

The study evaluated performance on five datasets spanning two knowledge domains (medical and coding) plus trivia. RAFT delivered gains across nearly all tests, sometimes drastically outperforming stronger models, RAG, and fine-tuning alone.

Use this technique when building AI solutions in domains rich with documentation that may be inherently incomplete or fail to cover every scenario.

Read on if you're curious about how you might influence reasoning in your day-to-day usage of AI tools.

Technique #1: Chain-of-Thought

Adding "think step-by-step" or "take your time" to your prompt has been shown to get LLMs to break a problem down into parts, leading to more thoughtful responses. This best practice was identified back in 2022, when LLMs were just entering the mainstream. It's a straightforward way to boost results that I highlight in my Basic AI workshop.
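If you build prompts in code, the same trick is a one-liner. This is a minimal sketch; the function name `with_reasoning_cue` is hypothetical, and the cue text is just the phrasing mentioned above.

```python
def with_reasoning_cue(prompt: str, cue: str = "Think step-by-step.") -> str:
    """Append a short reasoning cue to the end of a prompt."""
    return f"{prompt.rstrip()}\n\n{cue}"


question = "A build takes 4 minutes and runs 15 times a day. How many hours is that per week?"
print(with_reasoning_cue(question))
print(with_reasoning_cue(question, cue="Take your time."))
```

Either string can then be sent to whichever assistant or API you already use.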

Researchers have continued iterating on this technique - the Chain-of-Thought paper has amassed over 3,000 citations! I recently encountered another simple prompt that further elevates reasoning.

Technique #2: Plan-and-Solve

Plan-and-Solve goes further by instructing an LLM to respond in two distinct phases - first devising a plan, then executing it. Integrate the following into your prompt: "Let's first understand the problem and devise a plan to solve the problem. Then, let's carry out the plan and solve the problem step by step."

When working with variables or math, also add: "extract relevant variables and their corresponding numerals and calculate intermediate results (pay attention to calculation and commonsense)."
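The two prompt fragments above can be kept as constants and attached to any task. This is a small sketch under my own naming; `plan_and_solve_prompt` and the `involves_math` flag are hypothetical, while the prompt wording is the text quoted above.

```python
PLAN_AND_SOLVE = (
    "Let's first understand the problem and devise a plan to solve the problem. "
    "Then, let's carry out the plan and solve the problem step by step."
)
MATH_ADDENDUM = (
    "Extract relevant variables and their corresponding numerals and calculate "
    "intermediate results (pay attention to calculation and commonsense)."
)


def plan_and_solve_prompt(task: str, involves_math: bool = False) -> str:
    """Combine a task with the Plan-and-Solve instruction, plus the math add-on if needed."""
    parts = [task.strip(), PLAN_AND_SOLVE]
    if involves_math:
        parts.append(MATH_ADDENDUM)
    return "\n\n".join(parts)


print(plan_and_solve_prompt(
    "Our hosting bill is $19 per month plus $0.50 per build minute. "
    "We used 120 build minutes. What is the total?",
    involves_math=True,
))
```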

Research shows this technique improves mathematical reasoning over other "zero-shot" prompts, meaning prompts that include no worked reasoning examples.

This is merely a starting point. To further improve reasoning, use multiple prompts, focusing on identifying multiple plans. Then, provide a framework to assess feasibility and select an optimal approach. Lastly, execute the plan (that is, the original instruction).
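The multi-prompt flow described above could be chained roughly like this. It is a sketch, not a tested recipe: `ask` stands in for whatever function sends one prompt to your model and returns its reply, and `plan_select_execute`, the prompt wording, and the stub model are all my own assumptions.

```python
from typing import Callable

# Hypothetical interface: takes one prompt, returns the model's reply as text.
Ask = Callable[[str], str]


def plan_select_execute(task: str, ask: Ask, n_plans: int = 3) -> str:
    """Run three prompts in sequence: propose plans, pick one, then execute it."""
    plans = ask(
        f"Task: {task}\n\n"
        f"Propose {n_plans} distinct plans for this task. Do not execute them yet."
    )
    choice = ask(
        f"Task: {task}\n\nCandidate plans:\n{plans}\n\n"
        "Rate each plan for feasibility, risk, and effort, then name the single best plan."
    )
    return ask(
        f"Task: {task}\n\nChosen plan:\n{choice}\n\n"
        "Carry out this plan step by step."
    )


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return f"(model reply to a {len(prompt)}-character prompt)"


print(plan_select_execute("Upgrade a Next.js site from Node 12 to Node 20", stub_model))
```

Swapping `stub_model` for a real API call is the only change needed to try it; each stage feeds the previous reply forward, so the final answer is grounded in the plan that was chosen.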

My Experience

Updating my friend's website from Node 12 to Node 20 gave me an opportunity to test domain-specific reasoning capabilities.

First, I asked GitHub Copilot to generate multiple upgrade strategies, compare them, and provide a recommendation. While generic, the answer did a good job of contrasting incremental vs. direct upgrade approaches.

Copilot's ability to offer specific, helpful guidance for resolving library dependency issues was even more impressive. It analyzed my next.config.js file and suggested updates. At each roadblock during the build step, it explained the problem and provided solutions that I could insert into my file with one click. That mattered because my experience with Next.js is limited, and I have to relearn the technology each time I use it. However, after upgrading from 12 to 16, I discovered Copilot still uses gpt-3 😱 and lacks access to recent data. I completed the upgrade with a Next.js GPT running on gpt-4, but the experience wasn't nearly as good.

The final step, of course, was deployment. Kudos to Netlify for their Generative AI integration within the build and deployment process. It detected deprecated Node 12 settings in my configuration. Their AI assistant went beyond explaining the error found in the build logs—it provided a solution and linked to relevant Next.js documentation.

The entire upgrade took just 2 hours without any grey hairs gained. A win in my book.

Final Thoughts

Netlify could have dismissed build issues as the customer's problem, a mere "user error." Instead, they made it easier for customers to succeed with their product. 🏆

AI-enabled products hold immense potential for enhancing the customer experience. Pinpointing user pain points can highlight opportunities to boost product value for many others. I believe AI isn't just for "AI products"; every product can improve with AI-powered features.

I'm eager to hear your thoughts. Have you considered how AI could improve the products you use?